As we get closer to the final release of ArangoDB 3.0 you can now test our brand new cluster capabilities with 3.0 alpha5.

In this post you will find step-by-step instructions on how to get a 3.0 alpha5 cluster up and running on Mesosphere DC/OS for testing. Any feedback is much appreciated. Please use our Slack channel “feedback30” for your hints, ideas and all other wisdom you'd like to share with us.

Please note that we are well aware of the “clunkiness” of the current cluster setup in 3.0 alpha5. The final release of 3.0 will make it much easier.

Launching the DC/OS cluster on AWS

Go to this page and choose a cloud formation template in a region of your choice. It does not matter whether you use “Single Master” or “HA: Three Master”. Once you click you will be taken to the AWS console login and then be presented with various choices. Do not bother with public slaves, just select a number of private slaves.

This will take approximately 15 minutes and in the end you have a Mesos Master instance and some Mesos Agents.

In the AWS console on the “CloudFormation” page under the tab “Outputs” you find the public DNS address of the service router, something like

ArangoDB3-ElasticL-A6TTKUWKO1AO-1880801302.eu-west-1.elb.amazonaws.com

You can now point your browser to the above DNS address of the service router to see the DC/OS web UI.

Acquire the public IP of your cluster from the UI (printed on the top left in the DC/OS UI below the cluster's name).

You will need this to set up an ssh tunnel to access your ArangoDB coordinators. We are hard at work to fix this nuisance by integrating a reverse proxy into our Mesos framework scheduler.

Setting up an sshuttle proxy to access servers

Install sshuttle from its GitHub repository (https://github.com/sshuttle/sshuttle). You need a reasonably new version for this to work with DC/OS.

Make sure that the PEM file associated with the DC/OS cluster is loaded into ssh:

ssh-add /home/<USER>/.ssh/<AWS-PEM-FILE>.pem

Then issue:

sshuttle --python /opt/mesosphere/bin/python -r core@<PUBLIC-IP> 10.0.0.0/8

(replacing the machine address with the one of your Mesos master, see above). Do not forget the core@ prefix. You will need the private ssh key which you used to create the DC/OS cluster.

This forwards all requests to IP addresses in the range 10.0.0.0/8 over an ssh connection into your DC/OS cluster. You need this to conveniently reach Marathon with the curl utility, to inspect the sandboxes of your ArangoDB tasks and to access the web UIs of your ArangoDB coordinators.
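To see which of the addresses used in this post are covered by the tunnel, here is a minimal Python sketch using the standard `ipaddress` module. The function name `goes_through_tunnel` and the address 192.168.1.10 are made up for illustration; only the subnet 10.0.0.0/8 comes from the sshuttle command above.

```python
import ipaddress

# The subnet passed to sshuttle in the command above.
TUNNELLED = ipaddress.ip_network("10.0.0.0/8")


def goes_through_tunnel(address: str) -> bool:
    """Return True if the address lies inside the subnet handed to sshuttle."""
    return ipaddress.ip_address(address) in TUNNELLED


if __name__ == "__main__":
    # 10.0.7.72 and 10.0.0.253 are the example addresses used in this post;
    # 192.168.1.10 is a hypothetical address outside the tunnelled range.
    for addr in ("10.0.7.72", "10.0.0.253", "192.168.1.10"):
        print(addr, goes_through_tunnel(addr))
```

Any address for which this returns False is not forwarded into the DC/OS cluster and is reached over your normal network route instead.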

Launching an ArangoDB3 cluster on DC/OS

Just use Marathon and the following JSON configuration arangodb3.json:

{
  "id": "arangodb",
  "cpus": 0.125,
  "framework-name": "arangodb",
  "mem": 4196.0,
  "ports": [0, 0],
  "instances": 1,
  "args": [
    "framework",
    "--framework_name=arangodb",
    "--master=zk://master.mesos:2181/mesos",
    "--zk=zk://master.mesos:2181/arangodb",
    "--user=",
    "--principal=princ",
    "--role=arangodb",
    "--mode=cluster",
    "--async_replication=false",
    "--minimal_resources_agent=mem(*):512;cpus(*):0.125;disk(*):512",
    "--minimal_resources_dbserver=mem(*):4096;cpus(*):1;disk(*):4096",
    "--minimal_resources_secondary=mem(*):4096;cpus(*):1;disk(*):4096",
    "--minimal_resources_coordinator=mem(*):4096;cpus(*):1;disk(*):4096",
    "--nr_agents=1",
    "--nr_dbservers=5",
    "--nr_coordinators=5",
    "--failover_timeout=30",
    "--secondaries_with_dbservers=true",
    "--coordinators_with_dbservers=true",
    "--arangodb_privileged_image=false",
    "--arangodb_image=arangodb/arangodb-preview-mesos:3.0.0a5"
  ],
  "env": {
    "ARANGODB_WEBUI_HOST": "",
    "ARANGODB_WEBUI_PORT": "0",
    "MESOS_AUTHENTICATE": "",
    "ARANGODB_SECRET": ""
  },
  "container": {
    "type": "DOCKER",
    "docker": {
      "image": "arangodb/arangodb-preview-mesos-framework:3.0.0a4",
      "forcePullImage": true,
      "network": "HOST"
    }
  },
  "healthChecks": [
    {
      "protocol": "HTTP",
      "path": "/v1/health.json",
      "gracePeriodSeconds": 3,
      "intervalSeconds": 10,
      "portIndex": 0,
      "timeoutSeconds": 10,
      "maxConsecutiveFailures": 0
    }
  ]
}

Edit the following fields to your needs:

- `--nr_dbservers=5` to change the number of database servers. You should use at most as many as your DC/OS cluster has nodes.
- `--nr_coordinators=5` to change the number of coordinators. You should use at most as many as dbservers; our standard configuration is to use the same number.
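If you prefer not to edit the file by hand, the two counts can also be changed programmatically. The following Python sketch is only an illustration: the helper names `set_option` and `resize` are made up, and the dictionary in the usage part is a shortened stand-in for the contents of arangodb3.json.

```python
import json


def set_option(args, name, value):
    """Return a copy of args in which `--name=...` is replaced by `--name=value`."""
    prefix = f"--{name}="
    if not any(arg.startswith(prefix) for arg in args):
        raise KeyError(f"option {prefix} not found")
    return [f"{prefix}{value}" if arg.startswith(prefix) else arg for arg in args]


def resize(config, dbservers, coordinators):
    """Return a copy of the Marathon app definition with new server counts."""
    if coordinators > dbservers:
        # Mirrors the advice above: at most as many coordinators as dbservers.
        raise ValueError("use at most as many coordinators as dbservers")
    args = set_option(config["args"], "nr_dbservers", dbservers)
    args = set_option(args, "nr_coordinators", coordinators)
    return {**config, "args": args}


if __name__ == "__main__":
    # Shortened stand-in for arangodb3.json; load the real file with json.load().
    config = {
        "id": "arangodb",
        "args": ["framework", "--nr_agents=1", "--nr_dbservers=5", "--nr_coordinators=5"],
    }
    print(json.dumps(resize(config, 3, 3), indent=2))
```

The sketch leaves the original dictionary untouched and does not check the count against the number of nodes in your DC/OS cluster; that check is still up to you.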

Make sure that your instances are large enough to satisfy the resource requirements specified in the `--minimal_resources_*` options.
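As a rough aid for this check, the resource strings from the configuration can be parsed and compared against what a node offers. This is a minimal Python sketch under assumptions: the helper names `parse_resources` and `fits` are made up, and the node capacities in the usage part are hypothetical numbers, not values reported by DC/OS.

```python
def parse_resources(spec):
    """Parse a string like 'mem(*):4096;cpus(*):1;disk(*):4096' into a dict."""
    result = {}
    for item in spec.split(";"):
        name, _, amount = item.partition(":")
        # Drop the role suffix such as '(*)' and keep only the resource name.
        result[name.split("(")[0]] = float(amount)
    return result


def fits(node, spec):
    """Return True if the node offers at least what one task of this kind requires."""
    required = parse_resources(spec)
    return all(node.get(key, 0) >= value for key, value in required.items())


if __name__ == "__main__":
    dbserver = "mem(*):4096;cpus(*):1;disk(*):4096"  # from arangodb3.json
    node = {"mem": 8192, "cpus": 2, "disk": 20000}   # hypothetical node capacity
    print(parse_resources(dbserver))
    print(fits(node, dbserver))
```

The sketch checks one task at a time. If several tasks end up on the same node, their requirements add up, so compare the node against the sum in that case.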

Do not change the name of the Docker images.

Acquire the internal IP address of the node running Marathon by clicking on System => Marathon in the DC/OS UI. We will use 10.0.7.72 in this example:

curl -X POST -H "Content-Type: application/json" http://10.0.7.72:8080/v2/apps -d @arangodb3.json --dump - && echo
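For readers who would rather script this step, the same request can be built with Python's standard library. This is a sketch, not part of the official setup: the function names `marathon_create_request` and `submit` are made up, port 8080 and the path `/v2/apps` are taken from the curl command above, and actually sending the request assumes the sshuttle tunnel is running.

```python
import json
import urllib.request


def marathon_create_request(marathon_host, app_definition, port=8080):
    """Build the POST request that the curl command above sends to Marathon."""
    body = json.dumps(app_definition).encode("utf-8")
    return urllib.request.Request(
        f"http://{marathon_host}:{port}/v2/apps",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def submit(request):
    """Send the request; urlopen raises urllib.error.HTTPError on a 4xx/5xx reply."""
    with urllib.request.urlopen(request) as response:
        return response.status, response.read().decode("utf-8")


if __name__ == "__main__":
    # Stand-in definition; in practice load arangodb3.json with json.load().
    request = marathon_create_request("10.0.7.72", {"id": "arangodb"})
    print(request.get_method(), request.full_url)
```

Building the request separately from sending it makes it easy to inspect the URL and body before anything reaches the cluster.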

You should then see the ArangoDB cluster start up. It should appear as a Marathon service offering a web UI. From there you should be able to reach your coordinators once the cluster is healthy. Furthermore, you should see tasks on the Mesos console at

http://ArangoDB3-ElasticL-A6TTKUWKO1AO-1880801302.eu-west-1.elb.amazonaws.com/mesos

(replace the host name with that of your service router). Your cluster is ready.

Shutting down the ArangoDB3 cluster

This needs a bit of care to make sure that all resources used by ArangoDB are properly freed. First, find the endpoint of the ArangoDB framework's web UI by opening Services => marathon. On the following page you will find an application called “arangodb”. At the bottom of it there are two links, one for each of the two ports of the ArangoDB framework. The first one points to the web UI of the framework scheduler. To properly clean up any state in the Mesos cluster you need this endpoint (first IP below) and the Marathon IP (second IP below):

curl -X POST -H "Content-Type: application/json" http://10.0.0.253:15091/v1/destroy.json -d '{}' ; curl -X DELETE http://10.0.7.72:8080/v2/apps/arangodb

Please run both commands on one command line to make sure that the ArangoDB service is removed from Marathon as soon as it has been properly shut down.
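The same two-step shutdown can be sketched in Python. The function names `shutdown_requests` and `run_in_order` are made up, and the endpoints are the example addresses from the commands above. Note one deliberate difference: the shell version joins the commands with `;`, so the second one runs in any case, whereas this sketch stops if the first request raises an error.

```python
import urllib.request


def shutdown_requests(framework_endpoint, marathon_endpoint, app_id="arangodb"):
    """Build the destroy request for the framework and the delete request for Marathon."""
    destroy = urllib.request.Request(
        f"http://{framework_endpoint}/v1/destroy.json",
        data=b"{}",
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    delete = urllib.request.Request(
        f"http://{marathon_endpoint}/v2/apps/{app_id}",
        method="DELETE",
    )
    return [destroy, delete]


def run_in_order(requests, send):
    """Send each request in turn; an exception from `send` stops the sequence."""
    return [send(request) for request in requests]


if __name__ == "__main__":
    requests = shutdown_requests("10.0.0.253:15091", "10.0.7.72:8080")
    for request in requests:
        print(request.get_method(), request.full_url)
    # To actually send them (needs the sshuttle tunnel):
    # run_in_order(requests, urllib.request.urlopen)
```

Passing `send` as a parameter keeps the order of the two calls explicit and lets you try the sequence with a dummy function before pointing it at a real cluster.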

Here is an awesome

Here is an awesome

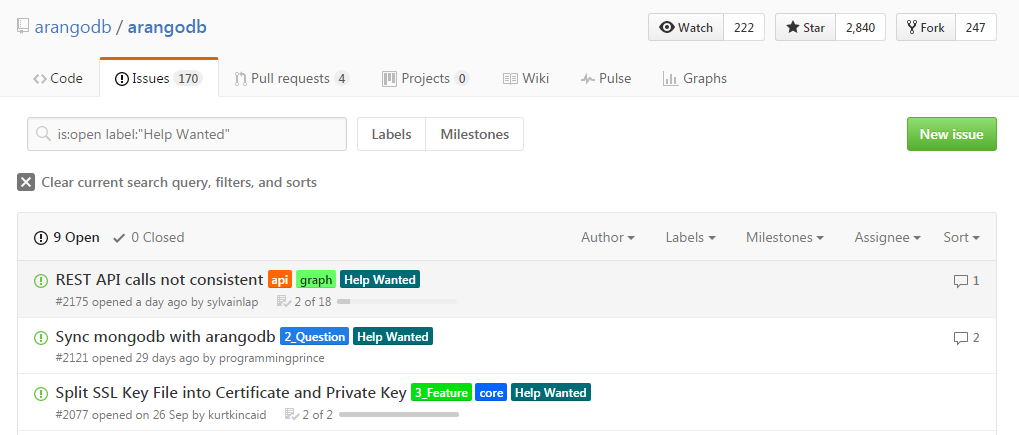

) Especially that we have recently received quite a few requests on how one can contribute to ArangoDB in an easy and quick way, we have decided that the time has come to get closer to our community and get even more involved.

) Especially that we have recently received quite a few requests on how one can contribute to ArangoDB in an easy and quick way, we have decided that the time has come to get closer to our community and get even more involved.